
User research is a core component of a successful product design process. It gives you an understanding of user behavior and the problems users have, and allows you to test out your solutions or validate a hypothesis.

The goal of user testing is to identify usability problems, collect qualitative and quantitative data, and determine the participant's satisfaction with the product. Usability testing lets design and development teams catch problems before the designs are coded. The earlier issues are identified and fixed, the less expensive the fixes will be.

We can all agree that usability testing is a highly valuable practice. Yet many of us don't do it. Why is that? Some of the reasons I hear:

- Time: Recruiting, scheduling and running the tests all take time. (For me, the effort of recruiting and scheduling alone is often the biggest deterrent.)

- Money: Paying participants incentives or paying third parties to manage and run tests for you.

- Fear: Fear of being proved wrong, or fear of introducing an influx of changes late in the development cycle.

But objections aside, testing must be done. I joined Mesosphere in October and worked with the team over the first couple of months to implement a system that would help automate and encourage (or force) us to run usability tests every two weeks. This is how we do it:

- Borrow heavily from Steve Krug's Rocket Surgery Made Easy

- Set up an opt-in email list and form, and start recruiting immediately

- Use a tool like Intercom, Mailchimp or Pardot to help manage and email contacts

- Use a tool like PowWow to help schedule sessions

- Offer incentives in the form of gift vouchers ($50-$100)

- Be flexible and run both in-person and remote tests

- Keep reports to the point and call out the top flaws so they'll be fixed

Recruiting customers and users

Mesosphere is a B2B company. Our Enterprise DC/OS product serves some very large customers, and our open source DC/OS technology reaches many more users. So our existing customers and users were the natural place to start.

First, we set up a Google Form enabling people to opt in to UX Research. If they opt in, we have permission to email them about every upcoming user testing session. We can also reach out to them for other user research needs, such as surveys, prototype feedback, interviews, and field visits.

The form captured people who were interested, but we had to let them know it existed. We set up a CNAME for uxresearch.mesosphere.com that pointed to the form, then added inbound links from the following sources:

- GitHub readme

- Social media (Twitter, Facebook, LinkedIn)

- Our website

- Meetups

- Public Slack channels

- Zendesk/Support follow up emails

We worked with other teams at Mesosphere (sales, support, marketing and product) to refer contacts and fill this pipeline.

The most effective trigger we set up was with Intercom, a service for communicating with users of your website or product. Every user who signs up and uses the product at least three times receives an email that looks like this.
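Intercom handles this kind of trigger through its own audience rules, so nothing needs to be coded on its side. The Python sketch below only illustrates the selection logic such a rule applies, assuming a hypothetical `User` record with a usage count and an "already invited" flag; these field names are illustrative and are not Intercom's data model.

```python
from dataclasses import dataclass
from typing import List


# Hypothetical user record; field names are illustrative, not Intercom's schema.
@dataclass
class User:
    email: str
    session_count: int     # number of times the user has used the product
    invited: bool = False  # whether the UX Research invite was already sent


MIN_SESSIONS = 3  # threshold described in the article


def users_to_invite(users: List[User]) -> List[User]:
    """Return users who reached the usage threshold and haven't been emailed yet."""
    return [u for u in users if u.session_count >= MIN_SESSIONS and not u.invited]


if __name__ == "__main__":
    users = [
        User("a@example.com", 1),
        User("b@example.com", 3),
        User("c@example.com", 7, invited=True),
    ]
    for u in users_to_invite(users):
        print(u.email)  # prints b@example.com
```

Tracking the `invited` flag is what keeps the trigger from emailing the same person again on their fourth or fifth visit.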

If you have an established user base, this works great. What if you don't? Here are some recommendations for attracting testers:

- Social media

- Weekly newsletter subscribers

- Friends

- Craigslist/TaskRabbit

- Relevant Slack groups

- Facebook groups

- UserTesting.com

Evolving our recruiting form

The Google Form and spreadsheet worked well for the first couple hundred entries, but after a while they got hard to maintain: we needed a better way to manage emails and lists.

Our sales and marketing teams are big users of Salesforce, so we worked with them to integrate with it. We now use Pardot to keep track of all our "UX Research Leads" via segment lists. This is a really powerful tool as it enables us to tap into all the information Salesforce stores about our customers.

Here's how we switched from Google Forms to Pardot:

- Created a new "UX Research" list in Pardot

- Imported all prospects from the spreadsheet

- Created a new capture form that is submitted to Pardot

- Changed the CNAME to point to the Pardot form
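Before importing a spreadsheet that people have filled in over months, it can help to remove duplicate sign-ups. This is a minimal sketch, assuming the Google Sheet is exported as CSV and the address lives in a column named `Email` (a hypothetical column name); it keeps the first row for each address, compared case-insensitively.

```python
import csv
import io
from typing import Dict, List


def unique_prospects(csv_text: str, email_column: str = "Email") -> List[Dict[str, str]]:
    """Return one row per email address (case-insensitive), keeping the first occurrence."""
    seen = set()
    rows = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Normalize so "ADA@example.com " and "ada@example.com" count as the same person.
        email = (row.get(email_column) or "").strip().lower()
        if email and email not in seen:
            seen.add(email)
            rows.append(row)
    return rows


if __name__ == "__main__":
    export = "Name,Email\nAda,ada@example.com\nAda L,ADA@example.com \nBob,bob@example.com\n"
    for row in unique_prospects(export):
        print(row["Name"], row["Email"])
```

Rows with an empty email are skipped, since they can't be contacted anyway.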

Now we can use the data already available to us in Salesforce to create new segments (e.g., filter where city = "San Francisco"). Then we use Pardot to email these lists about upcoming sessions.
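In Pardot the segment is built through its own list tools; the sketch below only shows the idea of a segment as a field filter over prospect records. The record fields (`email`, `city`) are hypothetical, not Salesforce or Pardot field names.

```python
from typing import Any, Dict, List


def segment(prospects: List[Dict[str, Any]], **criteria: Any) -> List[Dict[str, Any]]:
    """Return prospects whose fields match every key=value criterion."""
    return [p for p in prospects if all(p.get(k) == v for k, v in criteria.items())]


if __name__ == "__main__":
    prospects = [
        {"email": "a@example.com", "city": "San Francisco"},
        {"email": "b@example.com", "city": "Berlin"},
    ]
    local = segment(prospects, city="San Francisco")
    print([p["email"] for p in local])  # prints ['a@example.com']
```

A local segment like this is useful for on-site sessions, since those participants need to be able to visit the office.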

Using Salesforce makes life a lot easier for us as it is a service our team was already using. However, if you don't use Salesforce, here are some alternatives:

- Keep using Google Forms and Google Sheets

- Use Intercom to manage your users, segments and emails

- Use another email service provider (ESP), such as Mailchimp, to email users

The full recruiting flow now looks like this.

Scheduling user testing sessions

The design team has agreed to set aside every other Thursday to run user tests. We have five slots, each lasting one hour:

- 10 a.m.

- 11:30 a.m.

- 1 p.m.

- 2:30 p.m.

- 4 p.m.

These times are fixed, and each one-hour session is followed by a 30-minute buffer to reset and prepare for the next test. We rely on Nielsen's law of diminishing returns: five tests should be enough to surface the main issues.
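The arithmetic behind the schedule and the "five tests" rule can be sketched in a few lines. `session_slots` derives the start times from a one-hour session plus a 30-minute buffer. `share_of_problems_found` uses the formula from Nielsen's "Why You Only Need to Test with 5 Users", 1 - (1 - L)^n, where L is the share of problems a single user uncovers; the default L = 0.31 is the average Nielsen reported, not a figure measured at Mesosphere.

```python
from datetime import datetime, timedelta
from typing import List


def session_slots(first: str = "10:00", count: int = 5,
                  length_min: int = 60, buffer_min: int = 30) -> List[str]:
    """Start times for back-to-back sessions, each followed by a reset buffer."""
    start = datetime.strptime(first, "%H:%M")
    step = timedelta(minutes=length_min + buffer_min)
    return [(start + i * step).strftime("%H:%M") for i in range(count)]


def share_of_problems_found(n_users: int, per_user_rate: float = 0.31) -> float:
    """Expected share of usability problems found by n users: 1 - (1 - L)^n."""
    return 1 - (1 - per_user_rate) ** n_users


if __name__ == "__main__":
    print(session_slots())  # prints ['10:00', '11:30', '13:00', '14:30', '16:00']
    for n in range(1, 8):
        print(n, round(share_of_problems_found(n), 2))
```

With L = 0.31, five users find roughly 84% of the problems, and each additional user adds less than the one before, which is why a sixth or seventh slot is rarely worth it.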

We use PowWow to schedule the tests. It lets users choose a time that suits them, cuts out the back-and-forth of emailing to agree on a time, adds the slots to your calendar and follows up with email reminders.

However, we've found that there is still a considerable drop-off rate, so it's good practice to follow up with a personal confirmation two days before.

Our goal is typically to have five users on site. If we can't, we fill the remaining slots with remote customers. Because there are always no-shows or last-minute cancellations (at least one in five, in our experience), we keep an internal backup list of interested teammates. If someone cancels at the last minute, we put the word out on Slack so the time doesn't go to waste.
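In practice this happens over Slack, but the bookkeeping is simple enough to sketch. This assumes a hypothetical schedule that maps each slot to a participant name, with `None` marking a cancellation, and a backup list kept in the order people volunteered; the names are made up for illustration.

```python
from collections import deque
from typing import Dict, List, Optional


def fill_cancellations(schedule: Dict[str, Optional[str]],
                       backups: List[str]) -> Dict[str, Optional[str]]:
    """Assign backup teammates, in order, to any slot whose participant cancelled (None)."""
    queue = deque(backups)
    filled: Dict[str, Optional[str]] = {}
    for slot, participant in schedule.items():  # dicts keep insertion (time) order
        if participant is None and queue:
            participant = queue.popleft()
        filled[slot] = participant
    return filled


if __name__ == "__main__":
    schedule = {"10:00": "Ana", "11:30": None, "13:00": "Raj", "14:30": None, "16:00": None}
    print(fill_cancellations(schedule, ["dev-1", "pm-1"]))
```

If the backup list runs out, the slot simply stays empty rather than being double-booked.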

We then document the schedule in our wiki and maintain a #user-testing Slack channel for full transparency.

Getting help with scheduling

Recruiting and scheduling takes up a lot of time, so our team sought assistance from one of our executive assistants who's masterful in managing calendars. Thankfully, this person was able to allocate some time each week to running this for us, and even optimized the process.

A good schedule coordinator will take care of:

- Finding new recruiting channels and working with other teams to fill the pipeline

- Scheduling each of the available slots every two weeks

- Confirming users are still going to show up

- Welcoming them on the day

- Following up afterward with gift cards

- Making sure our user testing lab is reserved

The result is that the design team spends more time on the designs and script.

Preparing for the tests

Each user testing day has a "Test Lead." It is this person's responsibility to run the tests, but they can (and are encouraged to) have others help—either by having another designer run a session, or by having a developer or product manager sit in.

The test lead usually spends some time with each of the designers or product managers in the build-up to the test to outline what it is they want tested or major questions they need help validating. These might be:

- Test work that has been built (dev and production)

- Test work in progress (prototypes)

- Test hypothesis and value (interview)

The product designers help put together a script for each feature they want tested, and the test lead makes sure they understand it well enough to run it. The script should not be treated as a word-for-word list of tasks; think of it more as a guide. It is fine to jump around and skip questions. We never get through everything in a test.

Note: Preparing a prototype for user testing often requires a bit of additional work to help with the story and tasks you are setting. So it helps to think about the story and flow when you begin the project.

Running the tests

On the day of testing, we greet the users, have them sign a waiver and bring them into the testing lab.

In the lab, we've already set up a MacBook that has tabs open for everything we plan to test. We use ScreenFlow to record the sessions and hook it up to a big screen so we can watch. We have no more than two people in a room: the test lead and either another designer, product manager or developer. Personally, I love when developers join as they get to see problems in real life and are then sold on fixing them.

Remember, most people haven't done this before and don't know what to expect. We start off by making the participant feel comfortable. Some of the things we'll say include:

- We're not testing you, we're testing our designs, so nothing you do is wrong.

- Think out loud as much as possible so we can understand your thought process.

- What websites do you like to visit daily? What news did you read this morning?

A couple of tips for conducting a successful test include:

- Focus on behaviors, not opinions

- Answer questions with questions

It's good practice to finish by asking whether they have any questions and, if they could wave a magic wand and have any feature today, what it would be. This helps us build a list of No. 1 feature requests and shows the user that we're listening to their feedback, without going down a rabbit hole of feature request after feature request.

After the test, remember to take a photo of the participant, say thanks and send a gift card (we typically offer $50-$100 Amazon gift cards depending on whether the session was remote or on-site). This is also a good time to ask whether any of their teammates would be interested in coming next time, or to schedule a field visit at their office.

A note on remote tests

Remote tests are also valuable, but we prefer in-person sessions because there's less reliance on technology and it's easier to reach the point where the participant feels comfortable.

Some of the things we'll do differently for remote tests include:

- Sending a waiver to sign via RightSignature

- Conducting the test via Google Hangouts

Analysis and report

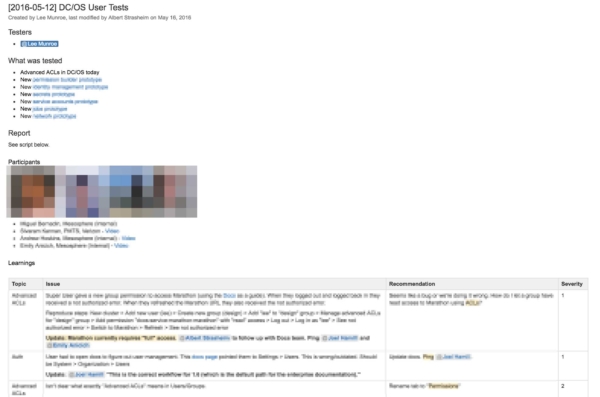

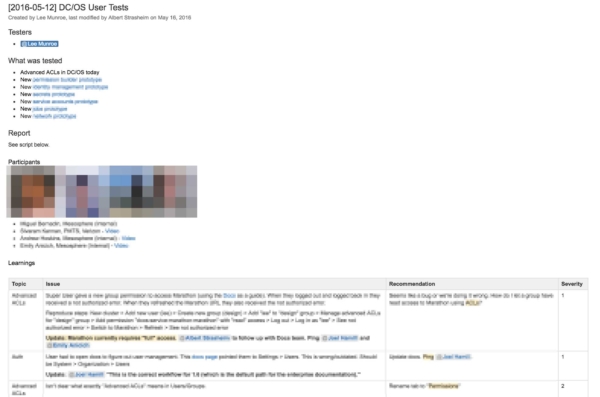

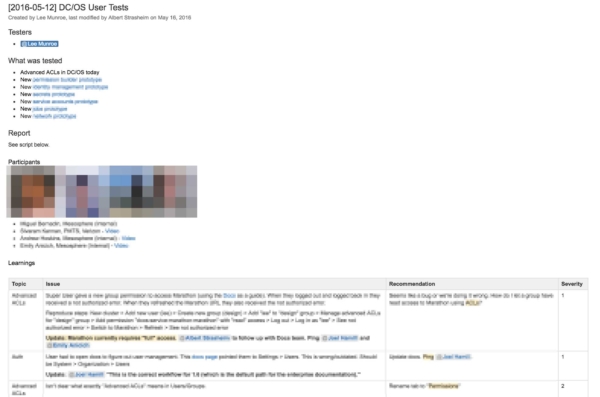

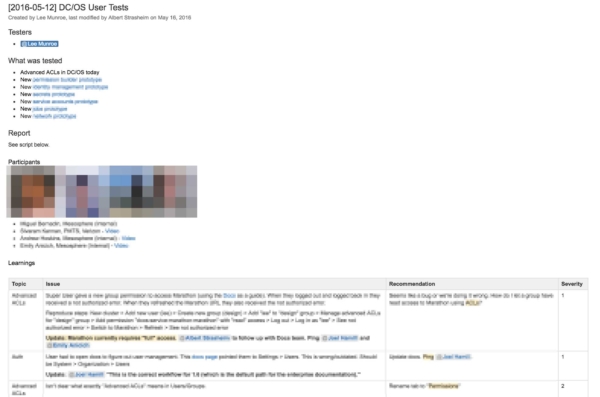

After all the sessions have concluded, take your raw notes and write a brief report that highlights the main issues. Our reports outline the top issues and assign each a priority:

- Critical bug that needs to be fixed now (e.g., the form doesn't submit)

- High priority (e.g., no one understands how to do something)

- Mid priority (e.g., took more effort than expected to do something)

- Low priority (e.g., you notice a hover style missing for a button)
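If findings are tracked as structured data, the four levels map naturally onto an ordered enum, so a report can list critical bugs first. This is a sketch with a hypothetical `Issue` record; the example topics echo the examples in the list above.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import List


class Priority(IntEnum):
    CRITICAL = 0  # bug that needs to be fixed now
    HIGH = 1      # no one understands how to do something
    MID = 2       # took more effort than expected
    LOW = 3       # cosmetic, e.g. a missing hover style


@dataclass
class Issue:
    topic: str
    priority: Priority


def triage(issues: List[Issue]) -> List[Issue]:
    """Most urgent first; sorted() is stable, so equal priorities keep their order."""
    return sorted(issues, key=lambda issue: issue.priority)


if __name__ == "__main__":
    issues = [
        Issue("Hover style", Priority.LOW),
        Issue("Form submit", Priority.CRITICAL),
        Issue("Setup wizard", Priority.HIGH),
    ]
    for issue in triage(issues):
        print(issue.priority.name, issue.topic)
```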

Then we share this report in our wiki. It includes:

- Who was tested (photos, names, titles, companies)

- What was tested (links to prototypes/designs)

- Topic, description of issue, recommendation on how to fix, priority

- Other notes

- Links to videos (stored in Google Drive)
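To keep every report in the same shape, the sections listed above can be generated from a small template. This is a minimal sketch, assuming the wiki accepts Markdown (an assumption; the article doesn't name the wiki software), with hypothetical `Finding` and `Report` records; participant photos are left out and represented by a name/title/company line.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Finding:
    topic: str
    description: str
    recommendation: str
    priority: str  # e.g. "Critical", "High", "Mid", "Low"


@dataclass
class Report:
    participants: List[str]  # names, titles, companies
    tested: List[str]        # links to prototypes/designs
    findings: List[Finding]
    notes: List[str] = field(default_factory=list)
    videos: List[str] = field(default_factory=list)  # e.g. Google Drive links


def render(report: Report) -> str:
    """Render the report sections as Markdown text for a wiki page."""
    lines = ["## Who was tested"] + [f"- {p}" for p in report.participants]
    lines += ["", "## What was tested"] + [f"- {t}" for t in report.tested]
    lines += ["", "## Findings",
              "| Topic | Issue | Recommendation | Priority |",
              "|---|---|---|---|"]
    lines += [f"| {f.topic} | {f.description} | {f.recommendation} | {f.priority} |"
              for f in report.findings]
    lines += ["", "## Other notes"] + [f"- {n}" for n in report.notes]
    lines += ["", "## Videos"] + [f"- {v}" for v in report.videos]
    return "\n".join(lines)


if __name__ == "__main__":
    report = Report(
        participants=["Jane Doe, SRE, Example Corp"],
        tested=["https://example.com/prototype"],
        findings=[Finding("Save", "Form doesn't submit", "Fix the submit handler", "Critical")],
    )
    print(render(report))
```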

We don't create a highlight reel, but I've seen this work well before. Realistically, no one has time to watch all the tests. So if there's something in particular you want to make sure is communicated and fixed, it helps to go back over the videos and pull out small clips of the user struggling or the bug you noticed.

We then share the report with anyone who is interested and record everything in the wiki so discussion happens through comments in one central location.

Follow-through

The most important thing is to take action on what you discover. There is no point doing all this if you don't actually make the changes to make the product better.

If you can show a clip of someone struggling, it is an easier sell to developers, stakeholders or whoever else needs convincing. Also, as I mentioned before, having developers and product managers sit in on a test helps build empathy.

Conclusion

Testing certainly isn't straightforward. It takes time and effort. But every session we run brings so much value. As your company and design team grow, you will likely have dedicated user research roles to help handle this, but it is always beneficial for all product designers to be involved in the process.

And with all of that being said, if you're an engineer and would like to be involved in our usability testing for early feedback and previews of new features, please sign up for our UX Research list.