Intel on Thursday announced its "Cloud for All" initiative to help companies of all shapes, sizes and technological abilities adopt cloud computing. Among the pieces of its new initiative are strategic investments in "software-defined infrastructure"; open standards and solutions; and two 1,000-node test clusters, hosted in conjunction with Rackspace, to let the community test out new features and overall performance.

Mesosphere also believes that the range of technologies broadly defined as "cloud computing" should not be limited to companies such as Google, Facebook and Twitter, which is why we're happy to be a strategic partner with Intel on this and other software-related initiatives.

And because there's often no better way to learn about something than to hear it explained by the folks involved, we spoke with Intel Principal Engineer Das Kamhout about the new initiative and about Intel's take on the new generation of cloud technologies overall.

Here's what he had to say.

Among the reasons Intel gives for launching "Cloud for All" are complexity, lack of scalability, and gaps in open source enterprise-grade features for cloud deployment. Can you elaborate on what those gaps are and how Intel is addressing them?

The Cloud for All program is intended to cover all types of use cases. Although today's announcement focuses heavily on OpenStack, we also see the need to help drive hyper-scale computing and bring Google-style containers to the masses.

Historically, enterprises have had a hard time even getting to the point of self-service and automation around technologies like virtual machines -- and they have been around for a long time. So we see a lot of barriers that we really need to tackle as an industry to bring cloud computing and hyper-scale to the masses.

One of the barriers comes down to high availability of tenants in a shared environment, meaning how to make sure the users' infrastructure is always on. While some enterprises have shifted their application models to assume failure and have switched to microservices and containers, the vast majority of applications and infrastructure today are still expected to be always on.

When companies have moved to cloud-native architectures, another key challenge becomes high availability of the cloud services themselves. If enterprises are building automation around APIs, we have to make sure those services are always on so the applications that connect and rely on them don't suffer.

On the scaling side, we see a pretty wide spectrum. Although most OpenStack clouds today have not gone past a couple hundred nodes, Intel is driving to make it easy to scale to 1,000 nodes or more as those environments mature.

And in general, one of our goals with Cloud For All is to allow people to lower the total cost of ownership for their infrastructure. I come from an HPC background, and the way we did that in grid computing was to build massive resource pools so there are fewer islands of capacity. The more we can move toward huge resource pools, the better IT shops can share capacity, increase utilization, and lower the cost of running their infrastructure.

And we don't want people to need Ph.D.s to be able to deploy and operate a cloud. We want it to be simple and beautiful and to mask that complexity.

This sounds a lot like what Apache Mesos, and Mesosphere's Datacenter Operating System, are proposing for users. What is the connection between Intel, Mesos and Mesosphere at the moment?

We partner with Mesosphere, and we also work directly on Apache Mesos. We strongly believe that a few types of solutions are necessary to reach the broad market of people running datacenters at scale. What we like about Mesosphere is its dual-level scheduling, which allows a lot of different things to run on top of a massive resource pool. The fact that Apple and Twitter are using it, its similarity to Google's Borg, and the way it abstracts a datacenter's resources into a single pool are all things that Intel is excited about.
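To make "dual-level scheduling" a little more concrete, here is a minimal Python sketch of the resource-offer idea under simplifying assumptions. A hypothetical `Master` owns one pooled set of CPU and memory across agents and offers whatever is free to each framework in turn (real Mesos uses a fairness-based allocator, Dominant Resource Fairness by default, rather than this simple ordered round). Each hypothetical `Framework` then decides for itself which offers to use and how many tasks to place. The class, method and agent names are illustrative only and are not the Mesos API.

```python
from dataclasses import dataclass, field


@dataclass
class Offer:
    """A slice of free resources on one agent (hypothetical type)."""
    agent: str
    cpus: int
    mem_mb: int


@dataclass
class Framework:
    """Level 2: a framework's own scheduler decides what to do with offers."""
    name: str
    task_cpus: int
    task_mem_mb: int
    pending_tasks: int
    launched: list = field(default_factory=list)  # agent of each launched task

    def resource_offers(self, offers):
        """Pack pending tasks into offers; return (agent, cpus, mem_mb) claims."""
        claims = []
        for offer in offers:
            cpus, mem = offer.cpus, offer.mem_mb
            count = 0
            while (self.pending_tasks > 0
                   and cpus >= self.task_cpus
                   and mem >= self.task_mem_mb):
                cpus -= self.task_cpus
                mem -= self.task_mem_mb
                self.pending_tasks -= 1
                self.launched.append(offer.agent)
                count += 1
            if count:
                claims.append((offer.agent,
                               count * self.task_cpus,
                               count * self.task_mem_mb))
        return claims  # anything not claimed is implicitly declined


class Master:
    """Level 1: owns the pooled resources and decides who receives offers."""

    def __init__(self, agents):
        # Copy so the caller's dict of [cpus, mem_mb] lists isn't mutated.
        self.free = {agent: list(res) for agent, res in agents.items()}

    def offer_round(self, frameworks):
        # Simplified policy (an assumption): offer all free resources to
        # each framework in list order, then deduct what it claimed.
        for fw in frameworks:
            offers = [Offer(agent, cpus, mem)
                      for agent, (cpus, mem) in self.free.items()
                      if cpus > 0 and mem > 0]
            for agent, cpus, mem in fw.resource_offers(offers):
                self.free[agent][0] -= cpus
                self.free[agent][1] -= mem


# Usage: two 4-core / 8 GiB agents shared by a web service and a batch job.
master = Master({"agent-1": [4, 8192], "agent-2": [4, 8192]})
web = Framework("web", task_cpus=1, task_mem_mb=1024, pending_tasks=5)
batch = Framework("batch", task_cpus=2, task_mem_mb=4096, pending_tasks=3)
master.offer_round([web, batch])
print(web.launched)    # ['agent-1', 'agent-1', 'agent-1', 'agent-1', 'agent-2']
print(batch.launched)  # ['agent-2']
print(master.free)     # {'agent-1': [0, 4096], 'agent-2': [1, 3072]}
```

The point of the split is that the master only decides who sees which resources, while each framework keeps its own placement logic. In this run the batch job places just one of its three tasks because the web service was offered the pool first, which is exactly the kind of contention a fairness-based allocator is meant to smooth out.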

Intel has people working on Apache Mesos, and we have some cool announcements coming out next month around driving utilization and performance. There aren't many schedulers out there, and Mesos is definitely a sweet spot for massive scale and is built for hybrid clouds.

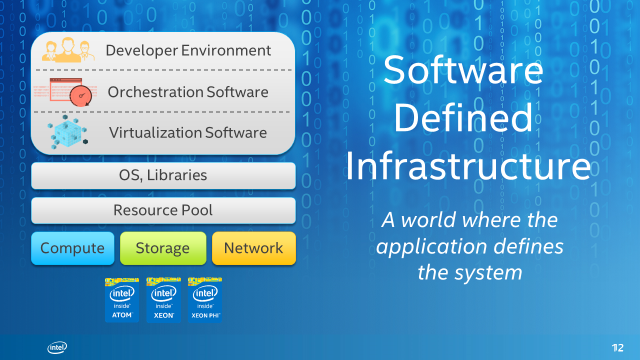

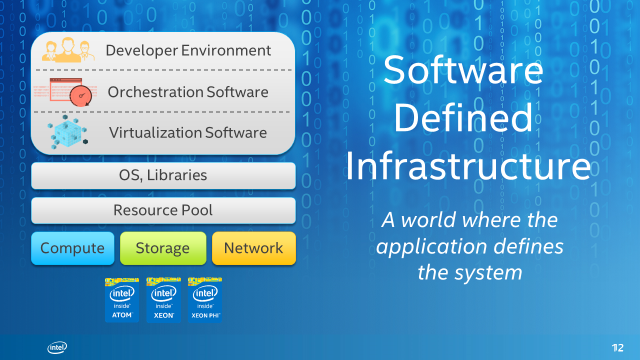

We basically see all of the technology components in the datacenter being delivered and optimized as software. It used to be that people would have giant storage environments that would scale up. Now, if you look behind the scenes at cloud storage providers, you find massive scale-out storage environments. In networking, telcos and cloud providers are bringing the logic and brains into the nodes, meaning the performance and capabilities of the servers have to drive all those functions.

For us, software-defined infrastructure (SDI) means turning your datacenter into resource pools with highly optimized infrastructure, so that the apps and data we touch are sitting on the right types of platforms.

We see the shift happening. We saw the shift in the major cloud providers, and we want everybody to be part of that shift, because it allows them to drive down their costs and step up their innovation at the application layer.

How is distributed computing changing the enterprise outlook on application and datacenter performance?

You've heard of the Jevons paradox: as you make it easier and more efficient to consume resources, people find new ways of using them. When we first did virtualization back in the day, people feared the world of servers would shrink, but instead it grew. The reason is that, as humans, when something gets easier and more efficient, we use it more and build more on top of it.

What we've seen in hyperscale environments that have switched to a containerized approach is demand for even more performance. It may appear that everything gets smaller and smaller and doesn't need performance, but it's the opposite. As people fine-tune their applications, for each thread they want the most performance they can attain.

You'll see Intel continue to focus heavily on our platforms for meeting the needs of the developers pushing innovation. It's a software maturity flow -- first they get excited working with distributed systems and scale-out infrastructure, then they focus on reliability, and then they want to focus on user response time.

Just like we focused in on performance of cores and threads, the next level is taking it across the entire datacenter -- a huge operating system that achieves all the performance that distributed applications need. I think that's a vision that Mesosphere and Intel have in common.

What's the connection between Cloud for All and the Cloud Native Computing Foundation, which was also announced this week with Intel (and Mesosphere) as a member?

It's all related. From a Cloud for All perspective, the key thing is understanding different applications, use cases and time frames when people make shifts. A perfect world might have everyone doing tight bin-packing and running hyperscale systems, but in reality that's not going to all happen at once. Some apps run great on containerized infrastructure, and many others don't.

The Open Container Initiative, announced last month, is tightly coupled with this, too. Essentially, we're trying to make sure that the right standards and support are in place at all levels of the cloud stack so companies can transition to cloud infrastructure in whatever fashion and whatever timeframes make sense to them.

Is there a connection between the work Intel is doing with Mesos and the work it's doing with OpenStack? Is there a marriage between the two technologies, or a nexus point?

There are two ways to look at it. The most simplistic is that people do run Mesos on top of Amazon Web Services today, and it can certainly run on top of a cloud platform like OpenStack, as well. Airbnb, for example, runs all of its Mesos workloads on Amazon today.

However, there's also the potential that Mesos could be the scheduler that actually runs something like OpenStack. So instead of Mesos on OpenStack, you would use Mesos to launch and manage resources for an OpenStack environment. In fact, Intel and Mesosphere submitted a proposal for the upcoming OpenStack Summit about exactly that.

If you look at what Google does today, for example, it runs everything inside Linux containers via its scheduler, Borg. Google Compute Engine is a cloud platform that spins up virtual machines for users, yet it too runs inside containers and is scheduled by Borg.

I'm glad to see there are options for teams that want to get creative about how they can best optimize their infrastructure. The natural inclination might be to run Mesos or the Datacenter Operating System on OpenStack nodes, but there might well be scenarios where it makes more sense to treat OpenStack as a service running inside Linux containers on Mesos.

Whatever the specific permutation, our focus is Cloud for All.